An Introduction to Terraform

What is Terraform

Terraform is a CLI tool that lets system administrators, developers, and others build infrastructure from declarative configuration files. This approach is commonly referred to as "Infrastructure as Code" (IaC), and many such tools are offered by some of the largest cloud providers and enterprise-focused companies. For example, Amazon has CloudFormation, Microsoft has Azure Automation, and Red Hat provides the Ansible Automation Platform.

Terraform was developed and is maintained by HashiCorp as its IaC platform. In HashiCorp's words:

Terraform allows you to build, change, and manage your infrastructure in a safe, consistent, and repeatable way by defining resource configurations that you can version, reuse, and share.

Terraform is designed to work with any platform that exposes APIs for managing infrastructure, and you can even write your own custom "provider" if one doesn't already exist.

The system works by maintaining a "state" that records the infrastructure Terraform currently manages (ex: 1 VPC, 3 subnets, 6 EC2 instances) and comparing it with what your configuration says should exist. Terraform consults this state at each stage of its workflow (planning, applying, destroying), so it can show you what it thinks needs to change before it applies those changes to the "real world".
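To make that comparison concrete, here is a minimal sketch of a configuration that declares six EC2 instances, matching the example above. It assumes an AWS provider is already configured (as in the provider.tf example later in this article), and the AMI ID is a hypothetical placeholder. If the state shows that only four of these instances exist (for instance because count was previously 4), the next plan proposes creating just the two missing ones, aws_instance.web[4] and aws_instance.web[5], rather than rebuilding all six.

```hcl
# Desired state: six identical EC2 instances.
# Terraform compares this against its recorded state and plans only the difference.
resource "aws_instance" "web" {
  count         = 6                       # desired number of instances
  ami           = "ami-0123456789abcdef0" # hypothetical AMI ID, replace with a real one
  instance_type = "t2.micro"

  tags = {
    # count.index runs from 0 to 5, giving each instance a distinct Name tag
    Name = "web-${count.index}"
  }
}
```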

Terraform is not restricted to working with IaaS solutions like AWS or Azure. It's also fully capable of interfacing with PaaS solutions like Kubernetes or Heroku as well as SaaS solutions like Fastly and GitHub.
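As an example of a SaaS provider, the sketch below manages a GitHub repository. It assumes the integrations/github provider from the Terraform Registry, with a personal access token supplied through the GITHUB_TOKEN environment variable; the organization name, repository name, and version constraint are hypothetical.

```hcl
terraform {
  required_providers {
    github = {
      source  = "integrations/github"
      version = "~> 5.0" # illustrative constraint
    }
  }
}

# The token is read from the GITHUB_TOKEN environment variable
provider "github" {
  owner = "example-org" # hypothetical organization
}

# A repository defined in the same declarative style as cloud infrastructure
resource "github_repository" "docs" {
  name        = "terraform-managed-docs" # hypothetical repository name
  description = "Repository created and managed by Terraform"
  visibility  = "private"
}
```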

Why should you use Terraform

Now that you have a basic understanding of what Terraform is, I'll explain why you should use it in your projects (when appropriate). Some of these apply to IaC in general, and others are unique to Terraform.

1. Single tool to manage all platforms

As I mentioned in the introduction, Terraform works with nearly all popular cloud platforms, and the list of providers keeps growing. If your projects are deployed to multiple providers (AWS, Azure, GCP), you might otherwise maintain separate code for each platform, each with its own syntax and deployment tooling. With Terraform, you can consolidate those codebases into a single language and workflow.
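A minimal sketch of what this looks like in practice: one configuration declaring the AWS, Azure, and Google Cloud providers side by side. The version constraints, region names, and GCP project ID are illustrative assumptions. Each provider still exposes its own resource types (aws_instance, azurerm_linux_virtual_machine, google_compute_instance, and so on); what stays the same is the language and the workflow.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.16"
    }
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
    google = {
      source  = "hashicorp/google"
      version = "~> 4.0"
    }
  }
}

provider "aws" {
  region = "us-east-1"
}

# The azurerm provider requires a features block, even if it is empty
provider "azurerm" {
  features {}
}

provider "google" {
  project = "example-project-id" # hypothetical GCP project
  region  = "us-central1"
}
```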

2. Consistent and repeatable processes

If you're not already using one of the aforementioned tools, you might be creating the resources you need by hand, either through the platform's web console or via its APIs. Whenever you repeat a manual process like that, say for your dev, QA, and production environments, errors and inconsistencies can creep in. Using a tool like Terraform gives you confidence that your infrastructure will look the same across each of your environments, no matter how many times you destroy and rebuild it. The configuration can (and should) be version controlled. If you regularly use Docker and Docker Compose, these ideas should be familiar.
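One common way to get this consistency is to keep a single configuration and vary only a small set of inputs per environment. In the sketch below, the variable name environment, its allowed values, and the per-environment .tfvars file names are assumptions for illustration.

```hcl
# variables.tf: one input that distinguishes the environments
variable "environment" {
  type        = string
  description = "Deployment environment"

  validation {
    # Reject typos such as "prd" before any changes are made
    condition     = contains(["dev", "qa", "prod"], var.environment)
    error_message = "The environment must be one of: dev, qa, prod."
  }
}

# dev.tfvars (a separate file):
#   environment = "dev"
#
# prod.tfvars (a separate file):
#   environment = "prod"
```

Each environment is then built from identical code, for example with terraform apply -var-file=dev.tfvars versus terraform apply -var-file=prod.tfvars.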

3. Extensive and extendable ecosystem

There are over 3,000 providers and nearly 13,000 modules currently listed on the Terraform Registry. Using these existing providers and modules will significantly cut down the time it takes to write your code or migrate it into Terraform's ecosystem. Modules typically provide default values for their input variables, and you can override those values when you instantiate the module. If you can't find an existing module or provider that fits, you can write your own (and potentially contribute it back to the community).
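As a sketch of reusing a registry module and overriding one of its defaults, the block below instantiates the community terraform-aws-modules/vpc/aws module. The name, CIDR ranges, availability zones, and version constraint are illustrative assumptions; check the module's documentation for its current inputs and defaults before relying on them.

```hcl
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 3.0" # illustrative; pin to the release you have tested

  name = "example-vpc"
  cidr = "10.0.0.0/16"

  azs             = ["us-east-1a", "us-east-1b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]

  # This input defaults to false in the module; setting it here overrides the default
  enable_nat_gateway = true
}
```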

4. Visibility and simplicity of code

Terraform's way of defining resources is fairly human-readable, so even if it's your first time looking at the code, you should quickly understand what's being created and be able to tweak things if necessary. A project structure that follows best practices (such as splitting code into modules and naming files after the type of resource they create) also makes the scope of the codebase easy to understand at a glance.

Basic project structure

A Terraform project consists largely of .tf (or .tf.json) files containing provider and resource definitions (e.g., an IAM role, a DigitalOcean droplet, or a VPC). In the simplest case, your project structure will look something like this:

my_tf_project/
├── main.tf
├── provider.tf
└── variables.tf

The provider.tf file will include, as you can probably guess, the providers you want to use. These provider definitions are composed of a name, source, and version constraint.

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.16"
    }
  }

  required_version = ">= 1.2.0"
}

provider "aws" {
  region  = var.region
  profile = "default"
}

In the code above, I've required the hashicorp/aws provider under the local name aws. This gives me access to all of the resources that provider makes available. The source attribute is the provider's source address; hashicorp/aws is shorthand for registry.terraform.io/hashicorp/aws, since by default Terraform looks up providers in the public Terraform Registry.

The version attribute is optional, but highly recommended, so that Terraform installs a provider version that's compatible with your setup.
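For reference, the pessimistic constraint operator ~> used above allows only the rightmost version component to increase. The sketch below spells out the same constraint with explicit bounds: any 4.x release from 4.16 onward is accepted, while 5.0, which may contain breaking changes, is excluded.

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
      # Equivalent to: version = "~> 4.16"
      version = ">= 4.16, < 5.0"
    }
  }
}
```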

Next is main.tf. In this example I'll put everything in a single file, although the usual practice is to break your code into separate modules.

resource "aws_instance" "app_server" {
  ami           = var.ec2-ami
  instance_type = "t2.micro"
  key_name      = "aws-keypair"
  subnet_id     = var.subnets[0].id

  tags = merge(
    var.tags,
    {
      Name = "ExampleAppServerInstance"
    }
  )
}

Each resource block defines one component of the infrastructure. It begins with the keyword resource, followed by the resource type ("aws_instance") and then a local name ("app_server"). You will often need to use values from one module or resource in another. Within the same module, you reference a resource's attributes directly, as aws_instance.app_server.<attribute-name>. To expose values from a module, you can create an outputs.tf file defining which values you'd like to export; a calling module then reads them as module.<module-name>.<output-name>. Terraform builds a dependency graph from these references, so it creates resources in the proper order without you needing to specify it explicitly.
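A minimal outputs.tf for the app server above might look like the sketch below, followed by a parent configuration that consumes one of its outputs. The module name app and its path ./modules/app are hypothetical, and the two parts live in different directories.

```hcl
# outputs.tf (in the module that defines aws_instance.app_server)
output "app_server_id" {
  description = "ID of the EC2 instance"
  value       = aws_instance.app_server.id
}

output "app_server_private_ip" {
  description = "Private IP address of the EC2 instance"
  value       = aws_instance.app_server.private_ip
}

# main.tf in a parent configuration (hypothetical module name and path)
module "app" {
  source = "./modules/app"
  # Pass values for the module's required input variables here as well
}

output "app_server_id" {
  # Reading the child module's output creates an implicit dependency on it
  value = module.app.app_server_id
}
```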

The final file to discuss is variables.tf. This file declares input variables whose values you can pass in and reference elsewhere in your project as var.<name>, for example the AWS region to create the resources in or a collection of common tags that should be applied to the resources.
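A variables.tf matching the references used in provider.tf and main.tf above (var.region, var.ec2-ami, var.subnets, var.tags) could look like the sketch below. The types, descriptions, and default values are assumptions; in particular, subnets is modeled as a list of objects with an id attribute because main.tf reads var.subnets[0].id.

```hcl
variable "region" {
  type        = string
  description = "AWS region to create resources in"
  default     = "us-east-1" # assumed default
}

variable "ec2-ami" {
  type        = string
  description = "AMI ID to launch the app server from"
  # No default: a value must be supplied, e.g. with -var or a .tfvars file
}

variable "subnets" {
  type        = list(object({ id = string }))
  description = "Subnets available to the app server; main.tf uses the first one"
}

variable "tags" {
  type        = map(string)
  description = "Common tags merged into every resource's tags"
  default = {
    Project   = "terraform-intro" # hypothetical values
    ManagedBy = "terraform"
  }
}
```

Variables without defaults can be supplied on the command line, for example terraform apply -var 'ec2-ami=ami-0123456789abcdef0', or from a terraform.tfvars file, which Terraform loads automatically.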

Showcase

The gif below shows the steps to initialize (terraform init) and apply (terraform apply) the code in your basic project. You will need to have already set up a user in the AWS IAM dashboard with a policy that permits it to create EC2 instances, and that user's access key ID and secret access key must be stored in your AWS CLI credentials file (~/.aws/credentials).
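The provider block in provider.tf selects the default profile from your standard credentials file. If your credentials live elsewhere or under a different profile, a sketch like the following (which would replace that provider block) points the provider at them explicitly. The file path and the profile name terraform-demo are hypothetical; shared_credentials_files is the argument name used by version 4.x of the AWS provider.

```hcl
provider "aws" {
  region = var.region

  # Hypothetical absolute path to an AWS CLI credentials file
  shared_credentials_files = ["/home/example-user/.aws/credentials"]

  # Hypothetical profile name; must match a [section] header in that file
  profile = "terraform-demo"
}
```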

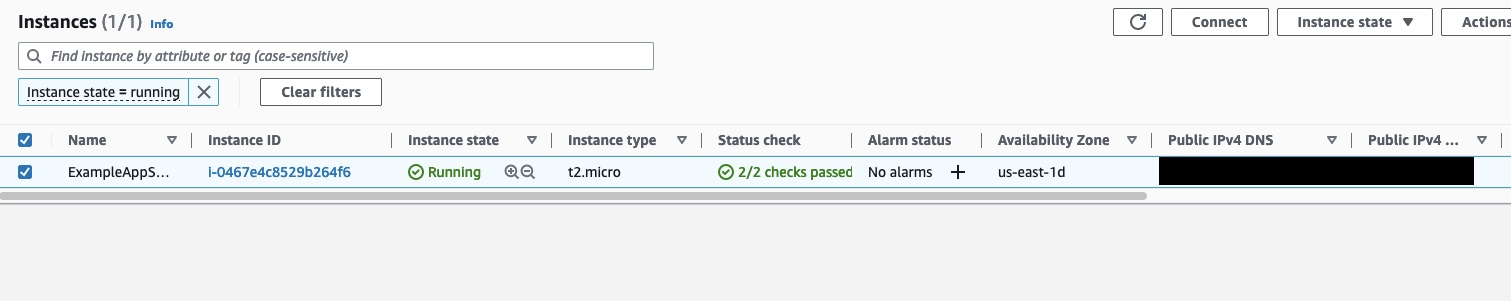

Assuming that your apply was successful you should now see an EC2 instance in your AWS console.

After confirming it worked, run terraform destroy so you don't keep the instance running and get charged for it.

Next Steps

Now that you have an understanding of what Terraform is and whether you should use it, here are some next steps:

- Install Terraform

- HashiCorp provides pre-compiled binaries for all major operating systems (Linux, macOS, and Windows), as well as the option to build it yourself from their GitHub repository.

- Read through the links in the resources section

- Read the next article in this series which guides you through using the commands and more advanced examples